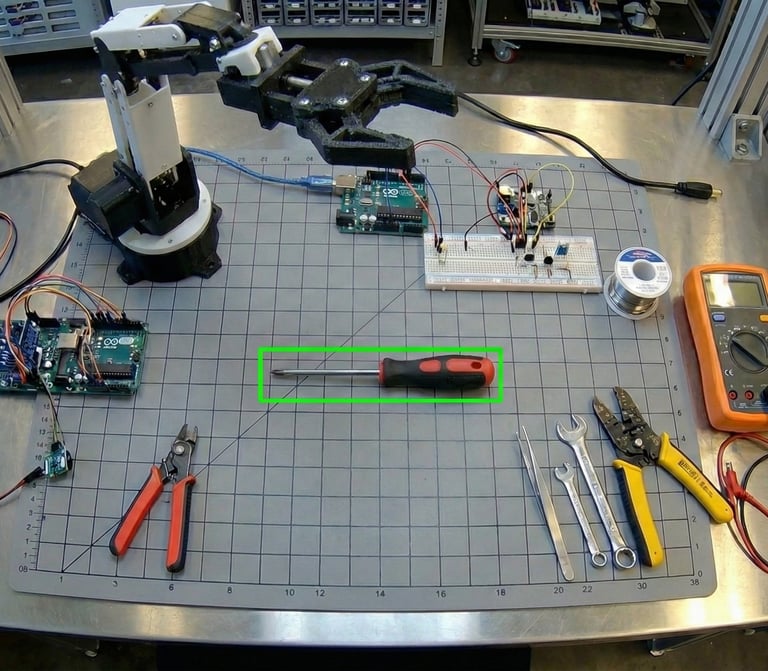

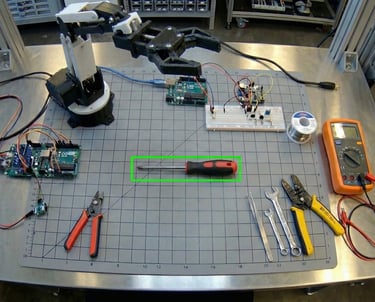

Robotic Arm / Computer Vision VLM

I developed a computer vision system that combines the CLIP and SAM models for object grounding and detection on a robotic arm, enabling real-world object recognition from natural language input. I integrated an NLP layer built on spaCy to parse and interpret commands such as "pick the screwdriver" or "take the controller device from the table". The system bridges vision and language so that a robot can understand and execute spoken or written instructions in real-world environments.
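
To make the language-to-vision flow concrete, here is a minimal, dependency-free sketch of the two steps described above: pulling the target object out of a command, then picking the image region that best matches it. It is an illustration under stated assumptions, not the project's actual code. `extract_target` is a simple rule-based stand-in for the spaCy parse, and `ground` assumes one common arrangement in which SAM proposes segments and CLIP supplies an embedding for each segment and for the text. The names `Segment`, `extract_target`, `ground`, and the word lists are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple

# Hypothetical word lists; a real system would rely on spaCy's parse instead.
ACTION_WORDS = {"pick", "take", "grab", "get", "up"}
LOCATION_PREPS = {"from", "on", "off", "in", "at"}
DETERMINERS = {"the", "a", "an"}


def extract_target(command: str) -> str:
    """Return the object phrase of a command (rule-based stand-in for spaCy)."""
    tokens = command.lower().strip(" .!?").split()
    # Drop the leading action words ("pick", "pick up", "take", ...).
    while tokens and tokens[0] in ACTION_WORDS:
        tokens.pop(0)
    # Cut off a trailing location phrase such as "from the table".
    for i, tok in enumerate(tokens):
        if tok in LOCATION_PREPS:
            tokens = tokens[:i]
            break
    return " ".join(t for t in tokens if t not in DETERMINERS)


@dataclass
class Segment:
    """One candidate region: a bounding box plus its image embedding."""
    box: Tuple[int, int, int, int]   # (x0, y0, x1, y1), assumed to come from SAM
    embedding: Sequence[float]       # assumed to come from CLIP's image encoder


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def ground(text_embedding: Sequence[float], segments: Sequence[Segment]) -> Segment:
    """Pick the segment whose embedding is most similar to the text embedding."""
    return max(segments, key=lambda s: cosine(text_embedding, s.embedding))
```

In use, `extract_target("take the controller device from the table")` yields the phrase `controller device`; that phrase would then be embedded with CLIP's text encoder and passed to `ground` along with the segment candidates. The toy two-dimensional vectors in any quick test are placeholders for real CLIP embeddings.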